NLP记录

Overview

最近有一个项目想做chat bot,也就是在IM app上自动回答问题的机器人。要回答问题,就要对问题有所了解,于是最近看了一些NLP (Natural Language Process)方面的资料。没有过多的涉及基础理论以及模型方面的知识。因为是做项目,更多的是希望能从工程方面直接进行应用。本文主要涉及SyntaxNet和NLTK。前者是Google于2016年开源的NLP项目,其包含了基本模型以及基于TensorFlow的实现。而且其基于若干训练资料,已经有一个pre-trained English model。可以直接被加以利用。

SyntaxNet

安装

一些参考网页

- NLP初级选手ubuntu 下安装google SyntaxNet

- How to Install and Use SyntaxNet and Parsey McParseface

- SyntaxNet Tutorial

- SyntaxNet: Understanding the Parser

安装完成后,运行一个demo程序如下:

echo 'Bob brought the pizza to Alice.' | syntaxnet/demo.sh

结果是一个树状结构

Input: Bob brought the pizza to Alice .

Parse:

brought VBD ROOT

+-- Bob NNP nsubj

+-- pizza NN dobj

| +-- the DT det

+-- to IN prep

| +-- Alice NNP pobj

+-- . . punct

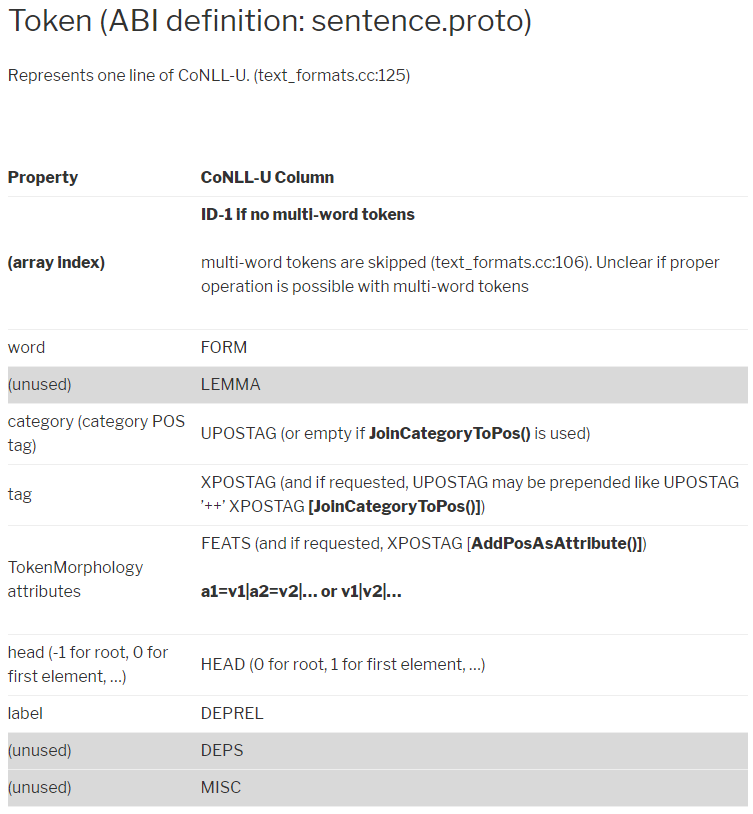

SyntaxNet自带的pre-trained English parser叫Parsey McParseface。我们可以用这个parser来分析语句。根据How to Install and Use SyntaxNet and Parsey McParseface中所述,Parsey McParseface输出实为CoNLL table。这个table的格式在models/syntaxnet/syntaxnet/text_formats.cc,如下:

50 // CoNLL document format reader for dependency annotated corpora.

51 // The expected format is described e.g. at http://ilk.uvt.nl/conll/#dataformat

52 //

53 // Data should adhere to the following rules:

54 // - Data files contain sentences separated by a blank line.

55 // - A sentence consists of one or tokens, each one starting on a new line.

56 // - A token consists of ten fields described in the table below.

57 // - Fields are separated by a single tab character.

58 // - All data files will contains these ten fields, although only the ID

59 // column is required to contain non-dummy (i.e. non-underscore) values.

60 // Data files should be UTF-8 encoded (Unicode).

61 //

62 // Fields:

63 // 1 ID: Token counter, starting at 1 for each new sentence and increasing

64 // by 1 for every new token.

65 // 2 FORM: Word form or punctuation symbol.

66 // 3 LEMMA: Lemma or stem.

67 // 4 CPOSTAG: Coarse-grained part-of-speech tag or category.

68 // 5 POSTAG: Fine-grained part-of-speech tag. Note that the same POS tag

69 // cannot appear with multiple coarse-grained POS tags.

70 // 6 FEATS: Unordered set of syntactic and/or morphological features.

71 // 7 HEAD: Head of the current token, which is either a value of ID or '0'.

72 // 8 DEPREL: Dependency relation to the HEAD.

73 // 9 PHEAD: Projective head of current token.

74 // 10 PDEPREL: Dependency relation to the PHEAD.

直接使用CoNLL table更易于被代码解析。如果需要CoNLL table输出,需要我们修改demo.sh。直接来个例子:

INFO:tensorflow:Processed 1 documents

1 What _ PRON WP _ 0 ROOT _ _

2 is _ VERB VBZ _ 1 cop _ _

3 a _ DET DT _ 5 det _ _

4 control _ NOUN NN _ 5 nn _ _

5 panel _ NOUN NN _ 1 nsubj _ _

这是对What is a control panel的输出。下图源于Inside Google SyntaxNet

CoNLL table中的所有tag缩写的含义在这里Universal Dependency Relations

针对CPOSTAG & POSTAG,可参考

NLTK

NLTK is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, wrappers for industrial-strength NLP libraries, and an active discussion forum.

我的想法是可以用NLTK来对SyntaxNet的输出做进一步Stemming/Lemmarization处理。

NLTK vs. spaCy

Stemming vs. Lemmatization

这两个词总是一起出现,作用很相像。区别是:

Lemmatisation is closely related to stemming. The difference is that a stemmer operates on a single word without knowledge of the context, and therefore cannot discriminate between words which have different meanings depending on part of speech. However, stemmers are typically easier to implement and run faster, and the reduced accuracy may not matter for some applications.

In computational linguistics, lemmatisation is the algorithmic process of determining the lemma for a given word. Since the process may involve complex tasks such as understanding context and determining the part of speech of a word in a sentence (requiring, for example, knowledge of the grammar of a language) it can be a hard task to implement a lemmatiser for a new language.

简而言之,就是Lemmarization是包含有上下文含义的,而Stemming只是对单个单词进行映射。

NLTK支持多种Stemmer,包括但不限于 Porter stemmer, Lancaster Stemmer, Snowball Stemmer。

>>> from nltk.stem import SnowballStemmer

>>> snowball_stemmer = SnowballStemmer(“english”)

>>> snowball_stemmer.stem(‘maximum’)

u’maximum’

>>> snowball_stemmer.stem(‘presumably’)

u’presum’

>>> snowball_stemmer.stem(‘multiply’)

u’multipli’

NLTK中的Lemarize:

>>> from nltk.stem import WordNetLemmatizer

>>> wordnet_lemmatizer = WordNetLemmatizer()

>>> wordnet_lemmatizer.lemmatize(‘dogs’)

u’dog’

>>> wordnet_lemmatizer.lemmatize(‘churches’)

u’church’

>>> wordnet_lemmatizer.lemmatize(‘is’, pos=’v’)

u’be’

>>> wordnet_lemmatizer.lemmatize(‘are’, pos=’v’)

u’be’

>>>

- pos = Part Of Speech